OmniNxt

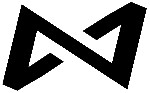

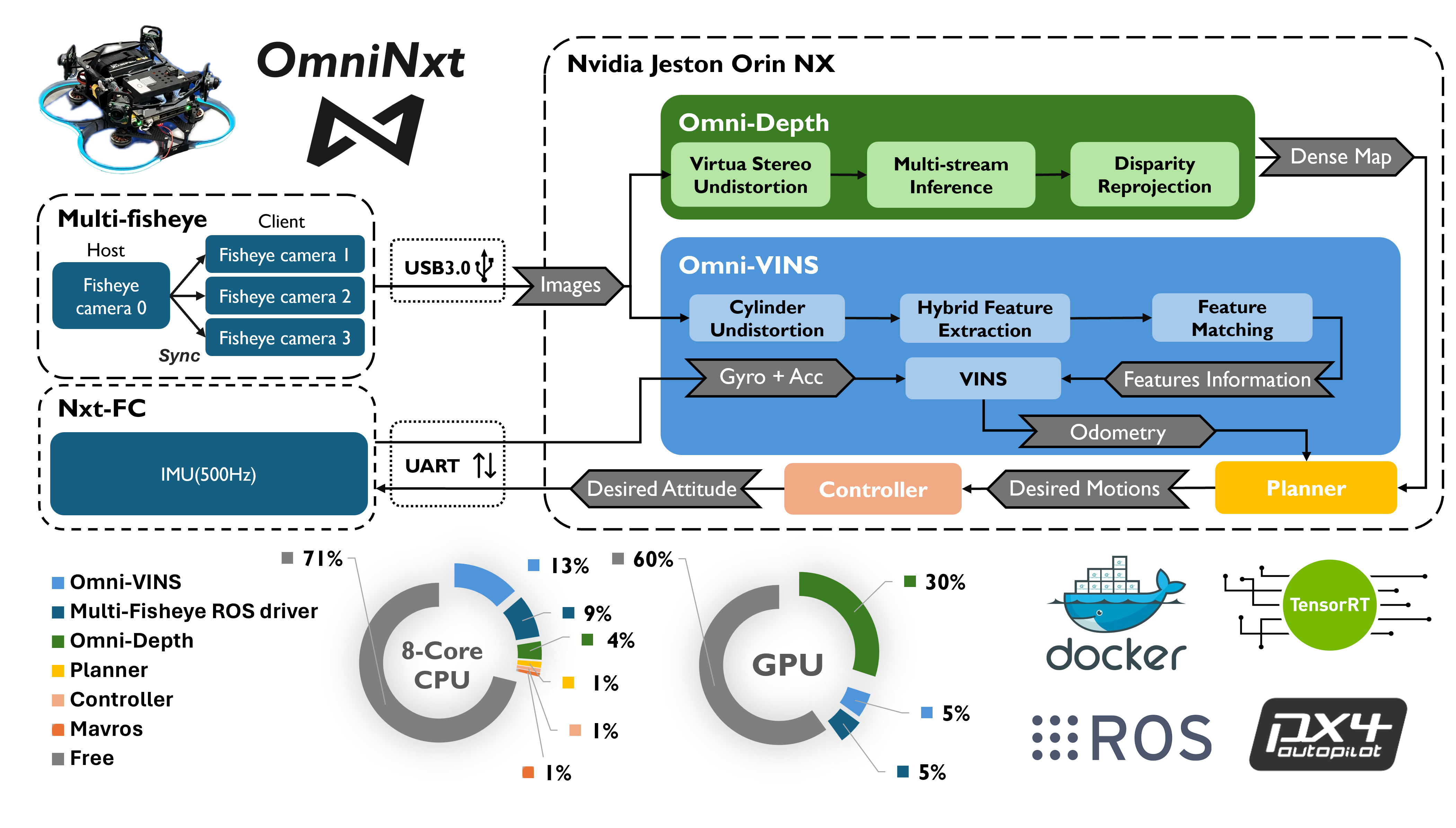

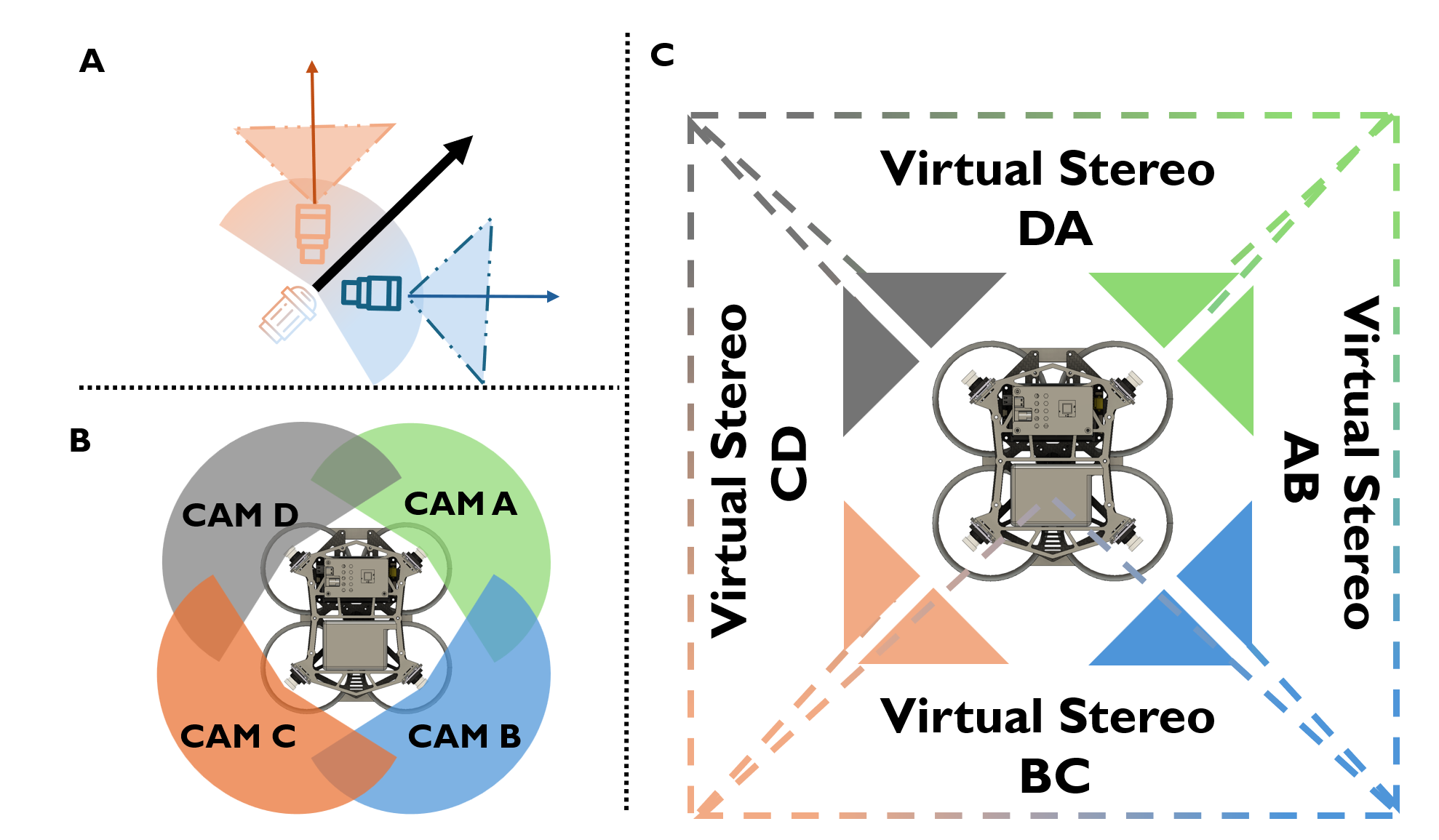

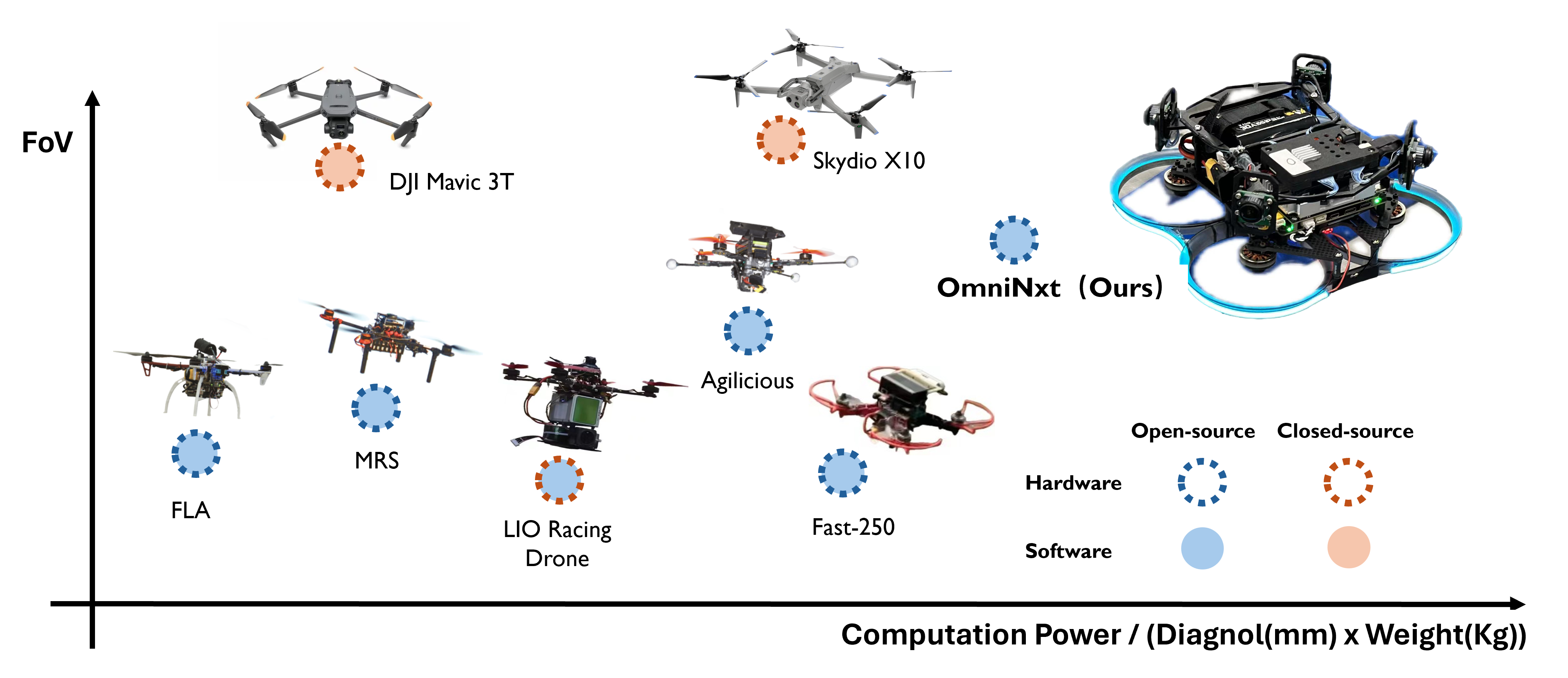

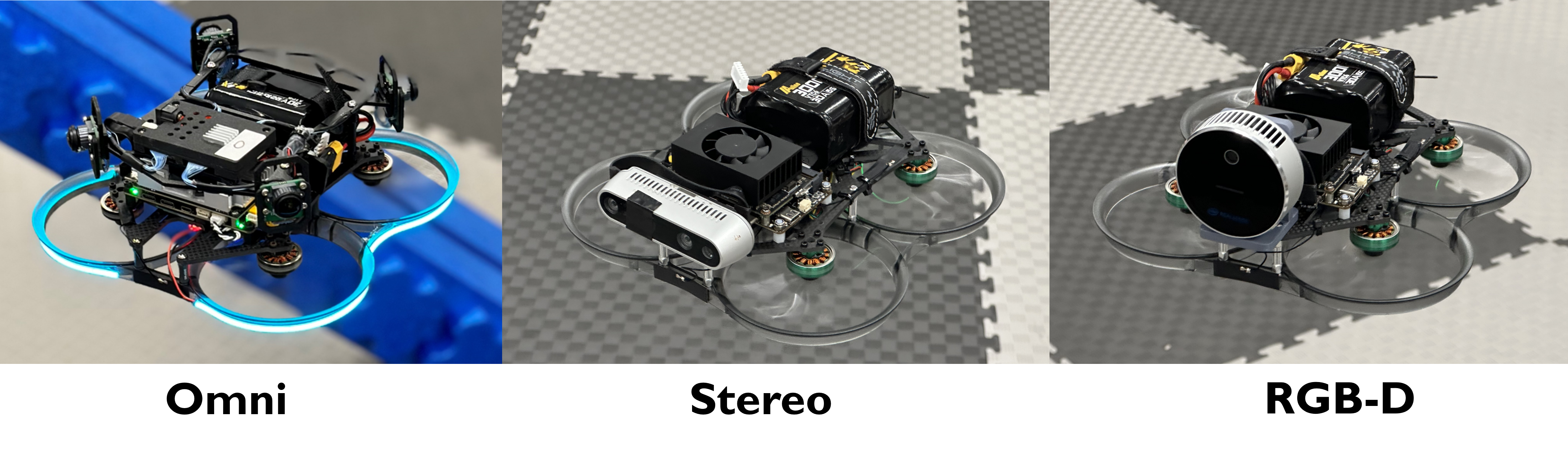

Adopting omnidirectional Field of View (FoV) cameras in aerial robots vastly improves perception ability, significantly advancing the capabilities of aerial robots in inspection, reconstruction, and rescue tasks. However, such sensors also increase system complexity, e.g., in hardware design and the corresponding algorithms, which keeps many researchers from using aerial robots with omnidirectional FoV in their research. To bridge this gap, we propose OmniNxt, a fully open-source aerial robotics platform with omnidirectional perception. We design a high-performance flight controller, NxtPX4, and a multi-fisheye camera set for OmniNxt. The accompanying software is carefully designed to let OmniNxt achieve accurate localization and real-time dense mapping with low computational resource usage. We conducted extensive real-world experiments to validate the performance of OmniNxt in practical applications. All hardware and software are openly available at https://github.com/HKUST-Aerial-Robotics/OmniNxt, and we provide Docker images for each crucial module of the proposed system. Project page: https://hkust-aerial-robotics.github.io/OmniNxt.

@article{liu2024omninxt,
  title={OmniNxt: A Fully Open-source and Compact Aerial Robot with Omnidirectional Visual Perception},
  author={Liu, Peize and Feng, Chen and Xu, Yang and Ning, Yan and Xu, Hao and Shen, Shaojie},
  journal={arXiv preprint arXiv:2403.20085},
  year={2024}
}